Security & Safety for the AGENTIC AI Age.

CONTINUOUS AI RED TEAMING

Ascend AI delivers continuous red teaming for agentic AI applications, using purpose-built offensive models to uncover vulnerabilities with the highest attack success rate in the industry. It automatically maps system context, probes multi-stage agent behavior, and finds failure paths that other security tools miss.

Runtime AI Guardrails

Defend AI autonomously enforces real-time guardrails that protect agentic applications with low latency and high accuracy. Its detection and blocking models outperform frontier LLMs, stopping evasions, unsafe tool use, and harmful actions before they execute.

Watch our protection in action.

Ascend AI

Ascend AI delivers continuous, automated red teaming that surfaces deep vulnerabilities in agentic applications before attackers can exploit them.

use cases

- Comprehensive red teaming of AI and agentic applications, including tools, MCP servers, RAG, and real-time UI workflows with WebSocket support.

- Pre-production risk evaluation with token-refresh resilience for long-running assessments.

- Compliance readiness for NIST AI RMF, NIST 800-204D, OWASP LLM Top 10, EU AI Act, HIPAA, PCI.

- Governance and standardization of AI testing across multiple teams and business units.

- Targeted assessment with custom BYO-prompts for regulated or domain-specific workflows.

Features

- One-click, continuous red teaming across every layer of the AI app: model, RAG, tools, MCP servers, agent orchestration, systems of record.

- Attack engine powered by fine-tuned offensive LLMs, multi-turn strategies, LAVA attacks, typoglycemia, identity exploitation, foreign language testing, and more.

- Redesigned single-app UI for PoCs with focused navigation, downloadable prompts with time filters, and table/stream split views.

- Multilingual attack coverage for international agentic workflows and AI applications.

- Integration-ready with Portkey, Lean APIs, and audit logs for enterprise review and IR workflows.

Benefits

- Identify deep AI and agent vulnerabilities that traditional AppSec tools cannot detect.

- Surface attack success patterns early to prevent real incidents in production.

- Dramatically reduce manual pen-testing effort through automated multi-turn agentic exploits.

- Maintain continuous compliance and build audit-ready evidence automatically.

- Ship AI features faster with confidence in security, safety, and grounding.

Defend AI

Defend AI delivers real-time, autonomous protection for agentic applications with guardrail models that detect and block threats faster and more accurately than frontier LLMs.

use cases

- Real-time guardrails for agentic applications, preventing data leakage, hallucinations, tool abuse, RCE patterns, and prompt injection.

- Protection and guardrails for real-time and streaming agentic apps.

- Enterprise-wide enforcement of AI safety, security, and compliance across multiple apps and teams.

- Global low-latency deployments, including regional clusters like Seoul for APAC workloads.

- Incident response support via downloadable prompts, audit logs, and full conversational traces.

Features

- Comprehensive guardrails for security, safety, grounding, tool manipulation, MCP exploitation, and malicious user behavior.

- Fast, fine-tuned detection engine with sub-second latency and frontier-beating accuracy.

- Privacy-preserving guardrails with isolated data paths and federated-learning options.

- Multi-modal detection support and multilingual coverage for global teams.

- Inline blocking, response shaping, sanitization, and developer-controlled enforcement via API/SDK, eBPF Sensor, AI Gateway, or Proxy.

Benefits

- Stop harmful or insecure AI behavior instantly without degrading user experience.

- Maintain strict compliance and data protection standards with real-time enforcement.

- Reduce workload on SecOps through high-accuracy detections that avoid alert fatigue.

- Gain end-to-end visibility into AI decisions, prompts, tool calls, and user interactions.

- Build trust in production AI by preventing failures before they impact customers.

Advanced AI Engine

Finds What Others Miss

Fine-tuned, agentic-native models deliver industry-leading detection and minimal false positives so teams can rely on every decision.

Stops AI Threats

Sub-second guardrail and detection performance designed for real production workloads without slowing users or agents.

Protect Your Data

Enterprise-grade privacy, isolated data paths, and adaptive guardrails that continuously improve without human tuning.

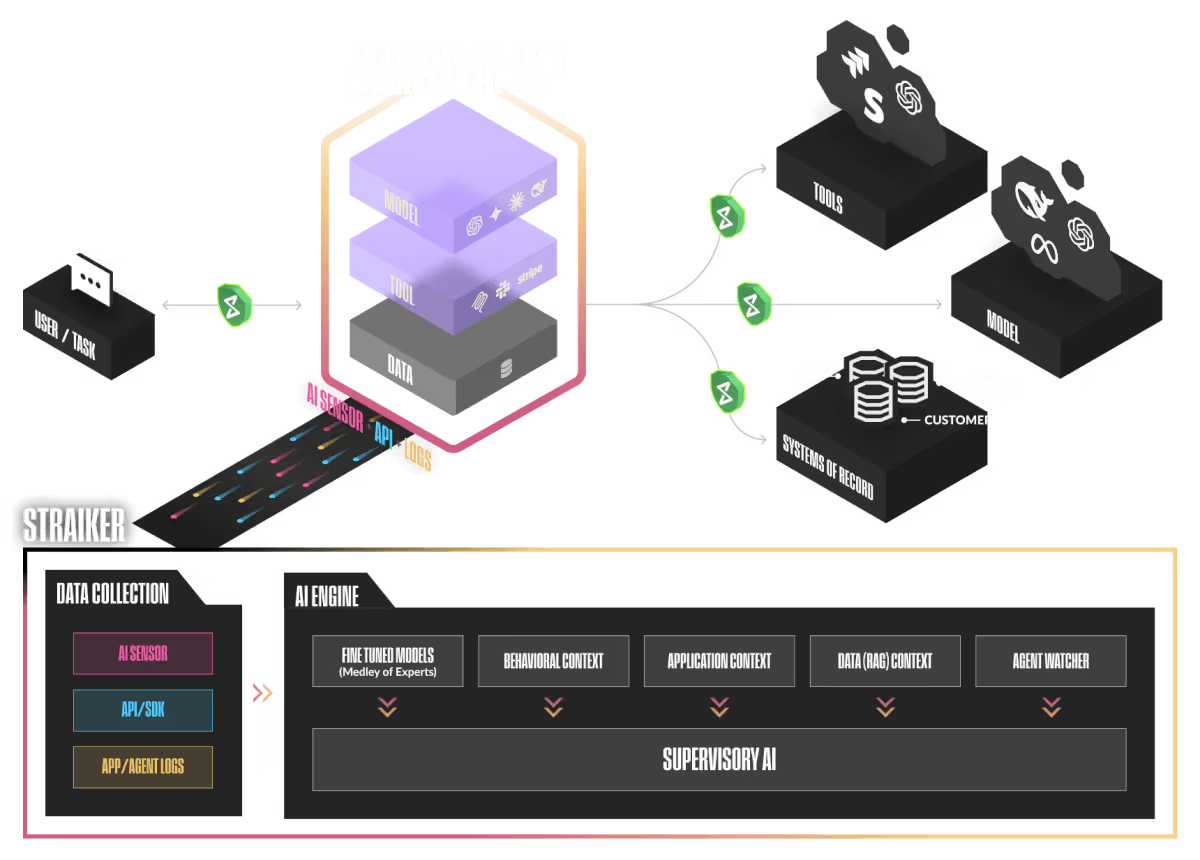

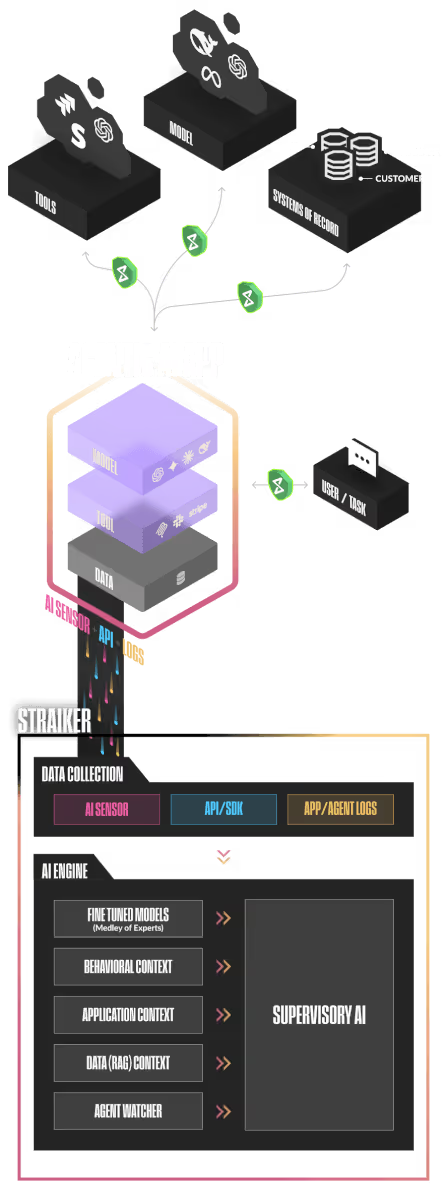

AI-Native Security Architecture Built for Agentic Applications

Straiker's multi-layered architecture collects deeper signals, reasons across full agent context, and powers the most accurate red teaming and runtime protection for agentic AI applications.

Together, these layers enable Straiker to see the full behavior of your agentic applications, uncover vulnerabilities early, and stop harmful actions in real time.

Application Flexibility

Works across any model, agent framework, or RAG system.

Straiker integrates with every type of AI or agentic application, regardless of your models, vector databases, orchestrators, or infrastructure. You get full security coverage without redesigning your stack.

Deep Signal Collection

Multiple insertion points for complete AI and agent context.

Straiker supports gateways, SDKs, OTLP, eBPF sensors, and thin-client proxies to capture user, network, tool, and agent signals. This multi-layer collection gives us the deep context required for accurate vulnerability discovery and high-fidelity runtime guardrails.

AI Security Engine

MoE + RLHF models trained to detect, exploit, and stop agentic vulnerabilities.

Straiker's AI engine uses fine-tuned models, MoE routing, and reinforcement learning to reason across the complete AI and agent stack. It powers both continuous red teaming and real-time protection with unmatched accuracy and low-latency performance.

OUR ELITE STRAIKER AI RESEARCH (STAR) TEAM

Our dedicated Al security research team performs cutting-edge research on Al models and agentic applications, as well as tactics employed by adversaries.

Securing the future, so you can focus on imagining it

Get FREE AI Risk Assessment

.avif)